TL;DR: We are somewhat uncomfortable with prompt engineering, because it’s hard to know how accurate a given prompt really is, and responses can be inconsistent and unreliable. There are ways to mitigate this.

There are a lot of factors you could consider in evaluating Large Language Models (LLMs) in contract analysis. Accuracy is likely near the top of any list. Recently, we wrote a piece evaluating how GPT-4 is at finding information in contracts. If thinking about LLM accuracy, there are two other things you should consider: measurability and predictability of accuracy. This piece will go into detail about these.

Background

In our previous piece evaluating how GPT-4 is at finding information in contracts, we said we planned two additional pieces evaluating Large Language Models in contract analysis:

- What are additional advantages and disadvantages of LLMs versus other tech for contract analysis.

- Where we think LLMs can be especially helpful in contract review, and where contracts AI tech is headed.

Silly us! As we got part of the way into writing about advantages and disadvantages of LLMs in contract analysis, we realized that the piece was going to be long. Very long. We were ~25% of the way through and it was already 11 pages. While Tim Urban or Casey Flaherty may be able to engage readers through long posts, we decided to break our analysis up into multiple parts.

This piece is focused on other issues with accuracy of LLMs like GPT-4: specifically, their measurability and predictability. Further pieces will cover issues including:

- How LLMs actually run on collections of documents, and implications for cost and scalability

- Trainability and pre-train-ed-ness

- Output format (and where chat is and is not well-suited)

Our takeaway from testing was that GPT-4 is impressive overall, but—on contract review tasks—it’s inconsistent and makes mistakes, and is probably not yet ready as a standalone approach if predictable accuracy matters. The piece has a LOT more details on how we got there, if you’re interested.

- Hosting options

- Potential copyright challenges

As in our previous piece, we use “Large Language Models,” “LLMs,” and “Generative AI” interchangeably. We recognize that this isn’t a great equivalence.

In our pieces, we try to lay out a framework for evaluating different technological approaches to pulling information from contracts. A few additional points:

- We mostly focus on GPT-3.5/4, but our intention is that the framework could work well for comparing other LLMs and tech approaches too, since it looks like this is soon to be an even more crowded field of product offerings.

- We are most experienced in contract analysis, but our guess is the same analysis applies to other information retrieval domains, including in the legal tech field. eDiscovery comes to mind.

If you don’t know us, you may wonder why we’re worth reading on this topic at all. Here’s a short segment (from the previous piece) on this.

Measurability of Accuracy

One way to write off our (popular, based on number of views!) “How is GPT-4 at Contract Analysis?” post is to say “if you used better prompts, then you could have gotten better results.” We got this comment a couple times on LinkedIn.

This fits with a broader theme: the idea that better prompts are key to getting good performance out of LLMs. While good prompts obviously matter, we think this issue is more complex than having a good prompt engineer around.

|  |

|---|

Despite Large Language Model approaches being very new, there are even multiple how-to books on prompt engineering.

Thinking that “all you need to do is use a different prompt" misses one of the most problematic aspects of using GPT-3.5/4 for contract review (or other) work:

It is very hard to determine how accurate a given prompt is. It’s relatively easy to take a given clause that GPT-4 missed in our tests, design a different prompt, and get the right result on a specific clause example. The problem is that you won’t know how the prompt will perform on other clauses that aren’t right in front of you. Your improved prompt might work better on known-knowns (e.g., the results GPT-4 missed in my tests). But it’s hard to tell if it works better on known-unknowns (results in contracts you haven’t reviewed but need to). In fact, your new improved prompt might actually work worse on known-unknowns, and you would have a hard time telling if this was so.

This is especially problematic because it doesn’t really matter how well a prompt works on a known-known clause—if you already know the answer, you don’t need an LLM to get it for you. In contract analysis use cases we can think of, it’s the known-unknowns that matter. Contract analysis AI tech helps users by reviewing new agreements, finding the known-unknowns in them. For example, having the tech finding change of control, exclusivity, price increase terms in a batch of agreements.

Measuring Accuracy

Accuracy in contract analysis is usually measured by “recall” (misses aka false negatives) and “precision” (false positives). Most contract analysis users we’ve interacted with care a lot about accuracy, and they tend to be more concerned with recall, even at the expense of extra false positives—they worry a lot about misses. Of course, every use case is different. If, for example, you plan to put results directly into a contract management system without human review, you may need to have higher precision (less false positives). And, if the specific provision is relatively low risk, then you may be more willing to accept some misses (lower recall).

We think accuracy is such an important issue for contracts AI systems that we devoted thousands of words to it in our last GPT post (and feel that we only scratched the surface). If you plan to use AI in a workflow where accuracy matters, then you should determine accuracy of systems you’re considering. Determining accuracy may be easy or not, depending on how you are interacting with the generative AI:

- Fine-tuning models. If you are fine-tuning a OpenAI model, it is possible to have the OpenAI API return validation numbers measuring the accuracy of your fine-tuned model. Other LLMs can be fine tuned too; validation ability should differ.

- Interacting via prompts. If instead of fine tuning models you are working with an LLM directly via prompts, it is possible to set up a testing framework for prompts. For example, an appropriate human could annotate a bunch of results, then different prompts could be tested against these results, and results could be tracked from different prompts in a spreadsheet (or the like). It will not be easy to do this well. You need to beware of “overfitting” prompts to the data, and should try to guard against this in your validation. This matters. In the early days of Kira, we switched our built-in model validation system from a holdout set to crossfold validation, which considered a larger, more robust pool of results. Our accuracy numbers instantly dropped by 20%. Our models didn’t actually get worse - we got better at measuring how accurate they were (and eventually got the models more accurate, partially spurred by our better understanding of our inaccuracy).

If trying to run your own validation, try to have a large pool of human-annotated results to test against. Try to make the testing examples as diverse as those you will see in production. See if you can rotate testing data to ward against overfitting. Note that this is even trickier than it sounds since automatic matching of responses is dicey. Specifically, there may not be a 1:1 correspondence between ideal response and generated response. In the IR/ML/NLP literature, there are metrics which try to do this (e.g., BLEU, ROUGE) by looking at (typically) word overlap between the gold standard and the machine generated.

- Interacting via untested prompts in chat. If you are interacting with a GPT model via chat, most likely you’re using your best guesses, perhaps informed by what you’ve seen work in the past, and are unlikely to be rigorously testing these prompts against known-unknowns (unless you’re using pre-tested/validated prompts). How do you know how accurate your prompt is? Experience may help, but we suggest that users be honest with themselves about whether this is more prompt “engineering” or “guessing / intuition.”

Which Prompt is Better?

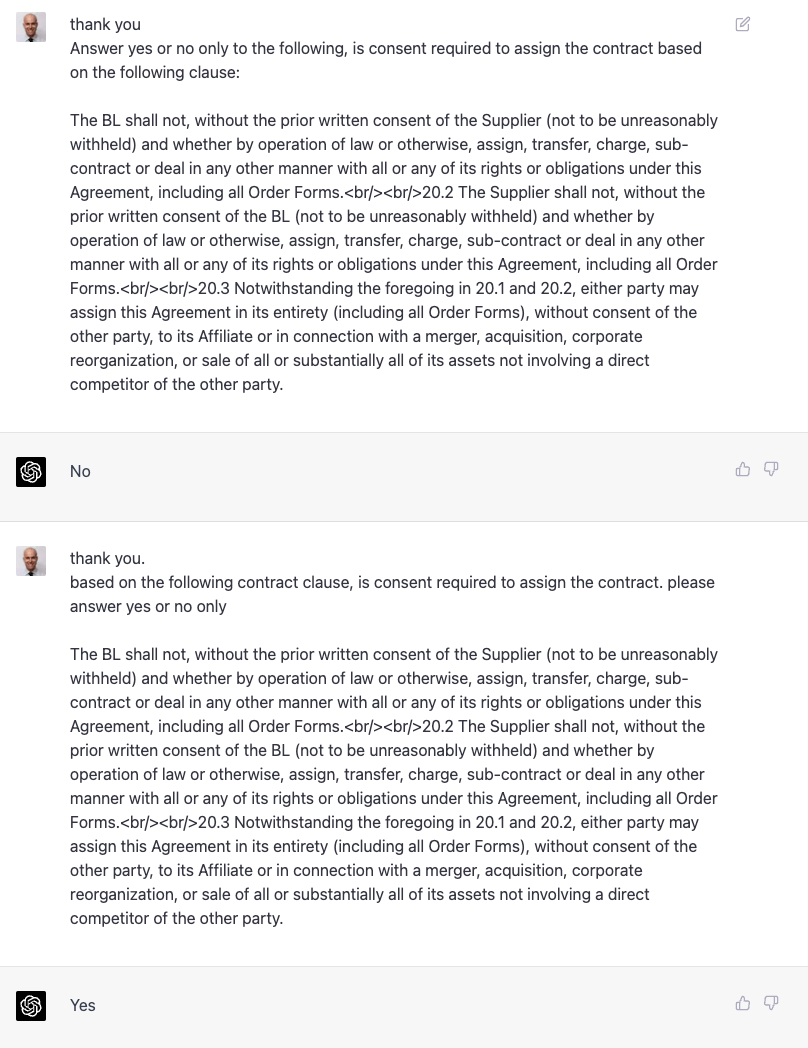

In our evaluation of GPT-4, we saw different responses based on the specific wording of the prompt. This sounds pretty typical of Generative AIs (and, in fact, is why prompt engineering has become so important for Generative AI users). In the below example, Adam devised the first prompt. When GPT-4 answered incorrectly, Noah came up with the second prompt.

Was Noah’s prompt really better than Adam’s, or did it just work this time, or on this specific test? Can we be sure that Adam’s wouldn’t have worked better on the next sample clause, or the one after that? Noah is skeptical that his prompt was objectively better than Adam’s. Adam has a computer science PhD, over a decade of research and work experience in the field of information retrieval (mostly eDiscovery and contract analysis), and we suspect most people who have worked with him (Noah included) would think Adam could design at least as good a prompt as they would. Yet here Noah’s prompt got the correct answer and Adam’s didn’t. The easy answer is that Noah’s prompt is better. But it seems equally likely to us that Adam’s prompt might get the correct answer on the next clause and Noah’s fail. That is pretty problematic, if you plan to use LLMs in a production setting where accuracy matters. The only way to know which prompt is actually better is to test over a sufficient test set².

Prompt engineering reminds Noah of his early days in contract analysis. At the time, there was disagreement in the industry between using rules and machine learning to find information in contracts (with Noah and his co-founder Dr. Alexander Hudek very firmly arguing that machine learning was better suited to this task³). Noah remembers one vendor claiming that their rules-based system was 100% accurate, because it found 100% of things that the rule described. LOL! For one conference talk that Alex and Noah did together, Alex built a rules prompt interface, which enabled users to run a rule against a database of [100-ish] agreements human annotated for confidentiality terms (generally a pretty easy clause to find). Users could suggest a rule, Alex would enter it and run it in front of them, and the rule would return recall and precision results against the human (Noah)-annotated clauses. We also included the comparable machine learning model accuracy alongside - for good reason, since the machine learning-based results were consistently better. Here, GPT appears to be a lot more powerful than rules, but it’s not perfect⁴, and users need to be careful to ensure they know what they’re getting with their prompts.

Ultimately, we suspect that prompt engineering will become more rigorous, with pre-vetted prompts becoming more popular relative to informal chat prompts⁵. Relatedly, we also suspect we’ll see more use of LLMs with pre-canned prompts and less chat. More discussion of that to come in a forthcoming post.

Prompts will become yet another programming language. This is inevitable for anything other than "casual" use.

— Jimmy Lin (@lintool) February 8, 2023

Predictability of Accuracy

Predictability of accuracy is a further issue with using Generative AIs. Generative AIs can answer the exact same prompt differently. This is why, in ChatGPT, there is a “Regenerate Response” button. Most Generative AIs have a thing called “temperature” that limits (to an extent) the amount of randomness that will be generated for an entire prompt. In some ways, regeneration is a neat feature. But since responses can differ each time a prompt runs, if using an LLM for data extraction, this means that accuracy can differ too. Inconsistent results have the potential to exacerbate the potential overconfidence-in-prompt issue discussed earlier, in that even if you were confident in your prompt, you would have to know that it might yield different results if you ran it again. Of course, there are lots of things like this - flip a coin a few times, it might come out different, but flip it enough and it should come out 50:50. If using a pre-tested prompt, it should likely also mostly work as expected over time, at least for a specific version of an LLM (but may not for new LLM versions or different LLMs). However, we would feel less confident if simply entering prompts into a chat box.

Note that this mutability of response isn’t innate to AIs generally. Supervised machine learning approaches are generally deterministic: a model will spit out the same results on the same text, every time (with some small exceptions⁶).

Conclusion

GPT-3.5/4 is really amazing tech. We remain very impressed. Even though these LLMs have limitations, they have really provoked our thinking of what’s possible in contract analysis. We have lots more to write (and do) on this.

1. Our takeaway from testing was that GPT-4 is impressive overall, but—on contract review tasks—it’s inconsistent and makes mistakes, and is probably not yet ready as a standalone approach if predictable accuracy matters. The piece has a LOT more details on how we got there, if you’re interested.

2. Or, perhaps, to use pre-vetted language. We discuss this more later in the section.

3. Noah even wrote a children’s book on machine learning (the world’s first?) in which a robot tries to learn to read using a rules-based approach, fails, then succeeds using a machine learning-based approach.

4. If GPT was perfect, you wouldn’t need to get the prompt right to get the right result.

5. ChatGPT moderation circumventing or jailbreaking prompts are a good example of pre-vetted prompts in action. E.g., “DAN” or “Yes Man”.

6. Possible variability with supervised machine learning models stems from the type of model and also the architecture on which it executes (i.e., models can sometimes be sensitive to architecture — so training on AMD and Intel may yield a differently performing model).